
Largest Contentful Paint (LCP) is the time it takes to load the single largest visible element in the viewport. It represents how quickly the page appears to load and is one of the three Core Web Vitals (CWV) metrics Google uses to measure page experience.

The first impression users have of your site is how fast it appears to load.

The largest element is usually going to be a featured image or perhaps the <h1> tag. But it could also be any of these:

- <img> element

- <image> element inside an <svg> element

- Poster image of a <video> element

- Background image loaded with the url() function

- Blocks of text

Support for more <svg> and <video> content may be added in the future.

Anything outside the viewport, and any overflow, isn't considered when determining the size. If an image occupies the entire viewport, it's not considered for LCP.
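To make these sizing rules concrete, here's a rough Python sketch that picks an LCP candidate from elements whose on-screen boxes are already known. It's a simplified model of the rules above, not how Chrome actually implements LCP. The `Element` class, the sample boxes, and the 1280x720 viewport are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Element:
    name: str
    x: float  # left edge, CSS pixels
    y: float  # top edge, CSS pixels
    width: float
    height: float
    is_image: bool = False

def visible_area(el: Element, vw: float, vh: float) -> float:
    """Area of the element inside the viewport; anything outside is ignored."""
    left, top = max(el.x, 0.0), max(el.y, 0.0)
    right, bottom = min(el.x + el.width, vw), min(el.y + el.height, vh)
    return max(right - left, 0.0) * max(bottom - top, 0.0)

def lcp_candidate(elements: list[Element], vw: float, vh: float) -> Optional[Element]:
    """Largest visible element, skipping images that fill the whole viewport."""
    best, best_area = None, 0.0
    for el in elements:
        area = visible_area(el, vw, vh)
        if el.is_image and area >= vw * vh:
            continue  # full-viewport images are treated like backgrounds
        if area > best_area:
            best, best_area = el, area
    return best

page = [
    Element("h1", 40, 100, 800, 80),
    Element("hero img", 0, 200, 1280, 600, is_image=True),  # bottom 80px is below the fold
    Element("full-bleed background", 0, 0, 1280, 720, is_image=True),
]
print(lcp_candidate(page, 1280, 720).name)  # hero img
```

Only the visible part of the hero image counts, and it still beats the <h1>. The full-viewport image is skipped entirely.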

Let's look at how fast your LCP should be and how to improve it.

A good LCP value is 2.5 seconds or less, and it should be based on Chrome User Experience Report (CrUX) data. This is data from actual Chrome users who are on your site and have opted in to sharing this information. You need 75% of page visits to load in 2.5 seconds or less.
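Passing is judged at the 75th percentile of visits, not the average. Here's a minimal Python sketch of that check. It assumes the nearest-rank percentile method (CrUX's exact aggregation may differ) and uses a made-up list of LCP times in milliseconds.

```python
import math

def p75(samples_ms: list[float]) -> float:
    """75th percentile using the nearest-rank method."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]

def passes_lcp(samples_ms: list[float], threshold_ms: float = 2500) -> bool:
    """True if at least 75% of visits load within the threshold."""
    return p75(samples_ms) <= threshold_ms

# Hypothetical LCP times (ms) for eight page visits.
visits = [1200, 1800, 2100, 2300, 2400, 2450, 3100, 4500]
print(p75(visits), passes_lcp(visits))  # 2450 True
```

Two slow visits out of eight (25%) don't cause a failure. If a third visit went over 2.5 seconds, the page would fail.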

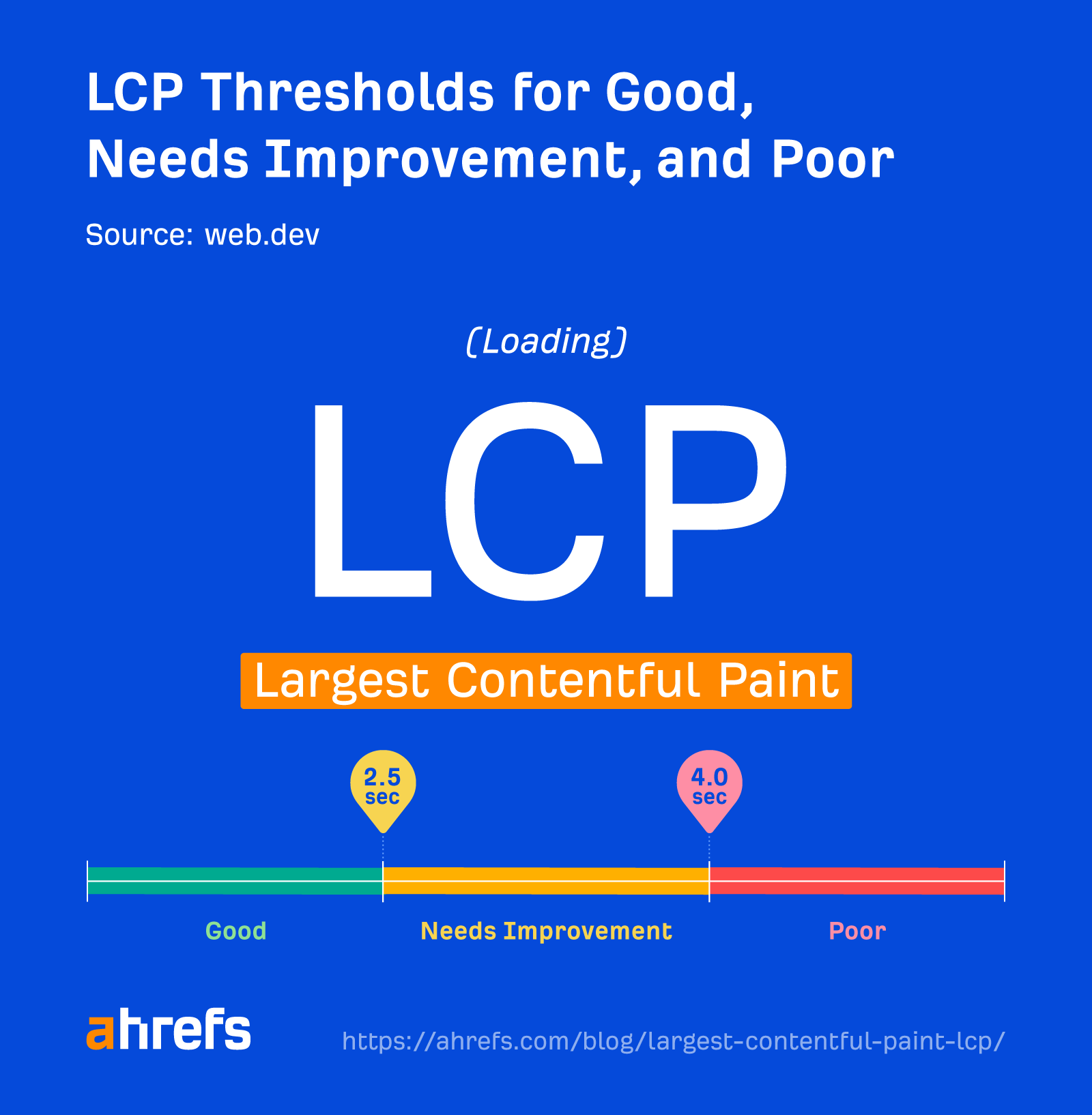

Your page may be classified into one of the following buckets:

- Good: <=2.5s

- Needs improvement: >2.5s and <=4s

- Poor: >4s
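Here are the same buckets as a small Python function, applied to a page's 75th-percentile LCP in seconds. The function name is only for illustration.

```python
def lcp_bucket(p75_seconds: float) -> str:
    """Classify a 75th-percentile LCP value using the thresholds above."""
    if p75_seconds <= 2.5:
        return "good"
    if p75_seconds <= 4.0:
        return "needs improvement"
    return "poor"

for value in (1.9, 2.5, 3.2, 4.0, 5.1):
    print(value, lcp_bucket(value))
```

Both boundaries are inclusive. A page at exactly 2.5s is still "good", and a page at exactly 4s still "needs improvement".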

LCP data

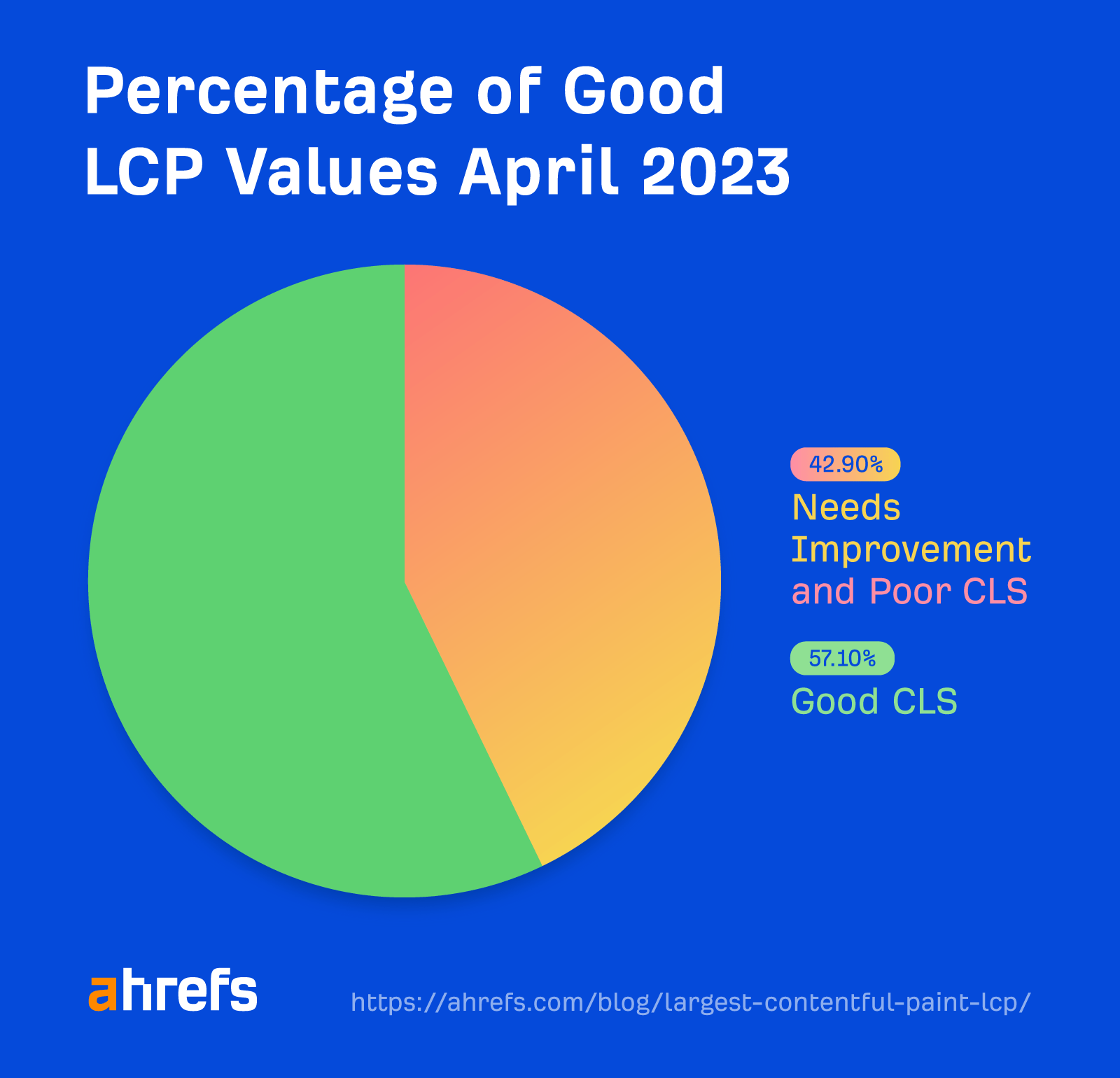

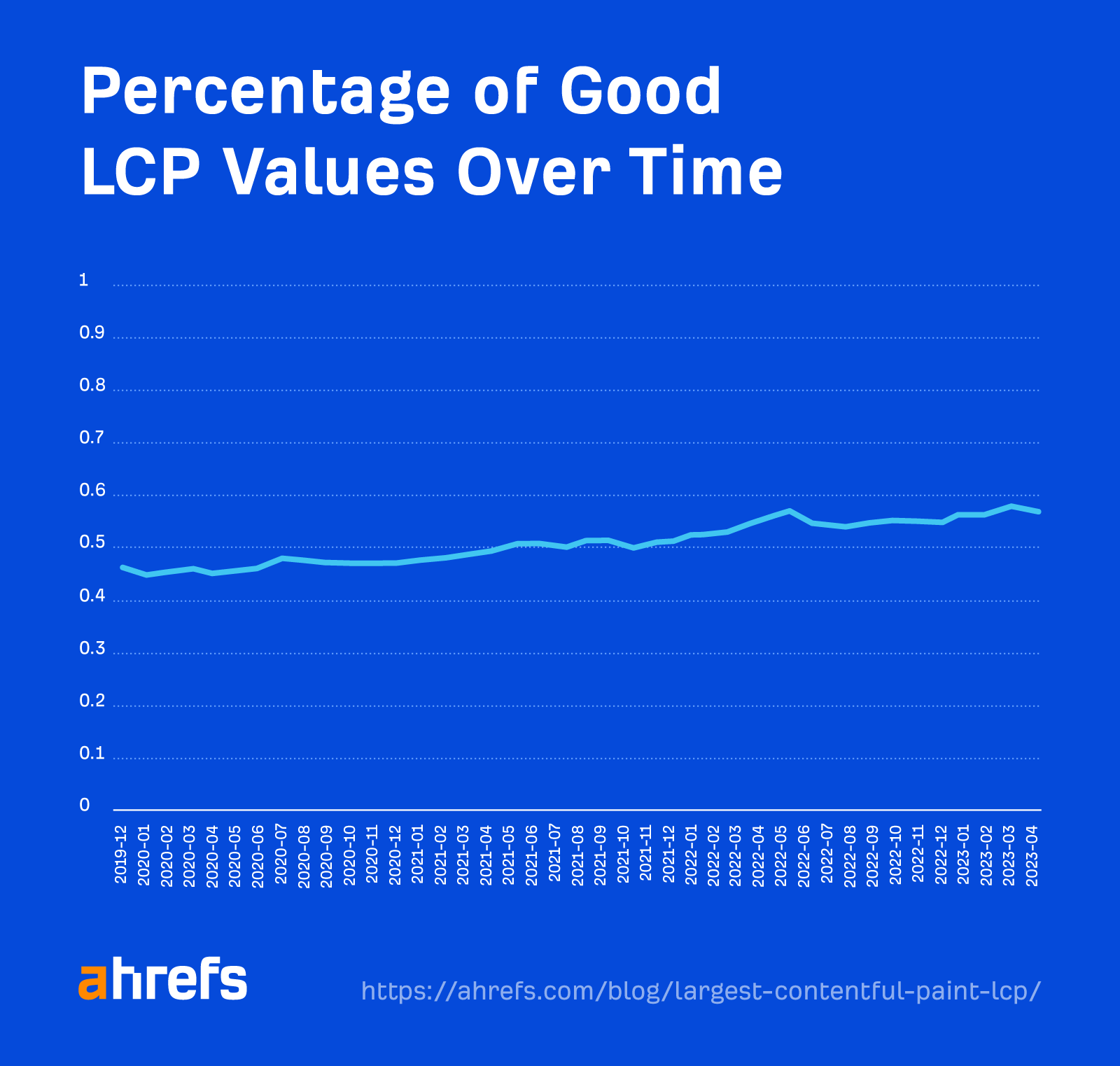

57.1% of websites are in the good LCP bucket as of April 2023. This is averaged across the site. As we mentioned, you need 75% of page visits to load in 2.5 seconds or less to show as “good” here.

LCP is the Core Web Vital that people struggle with the most.

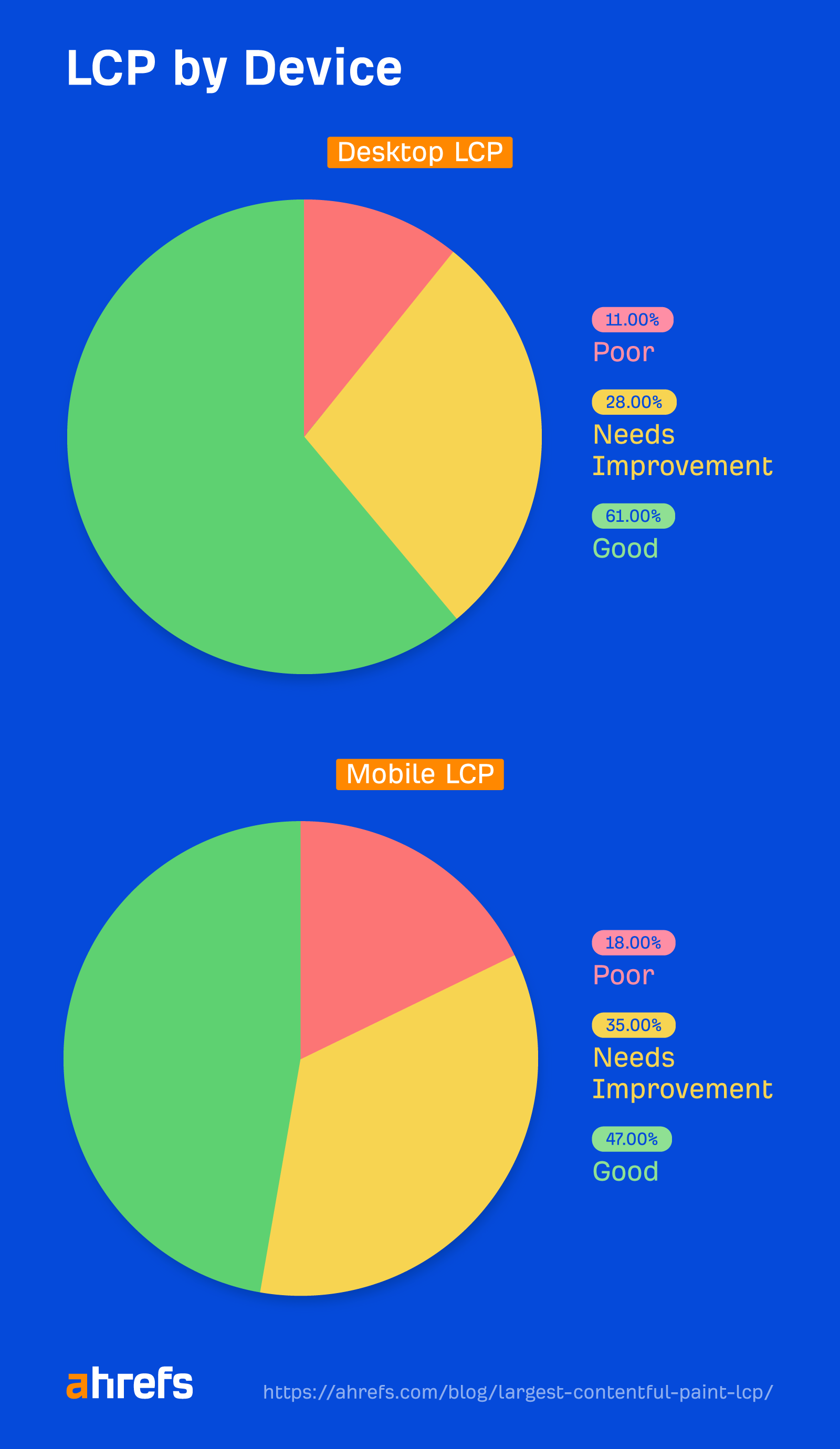

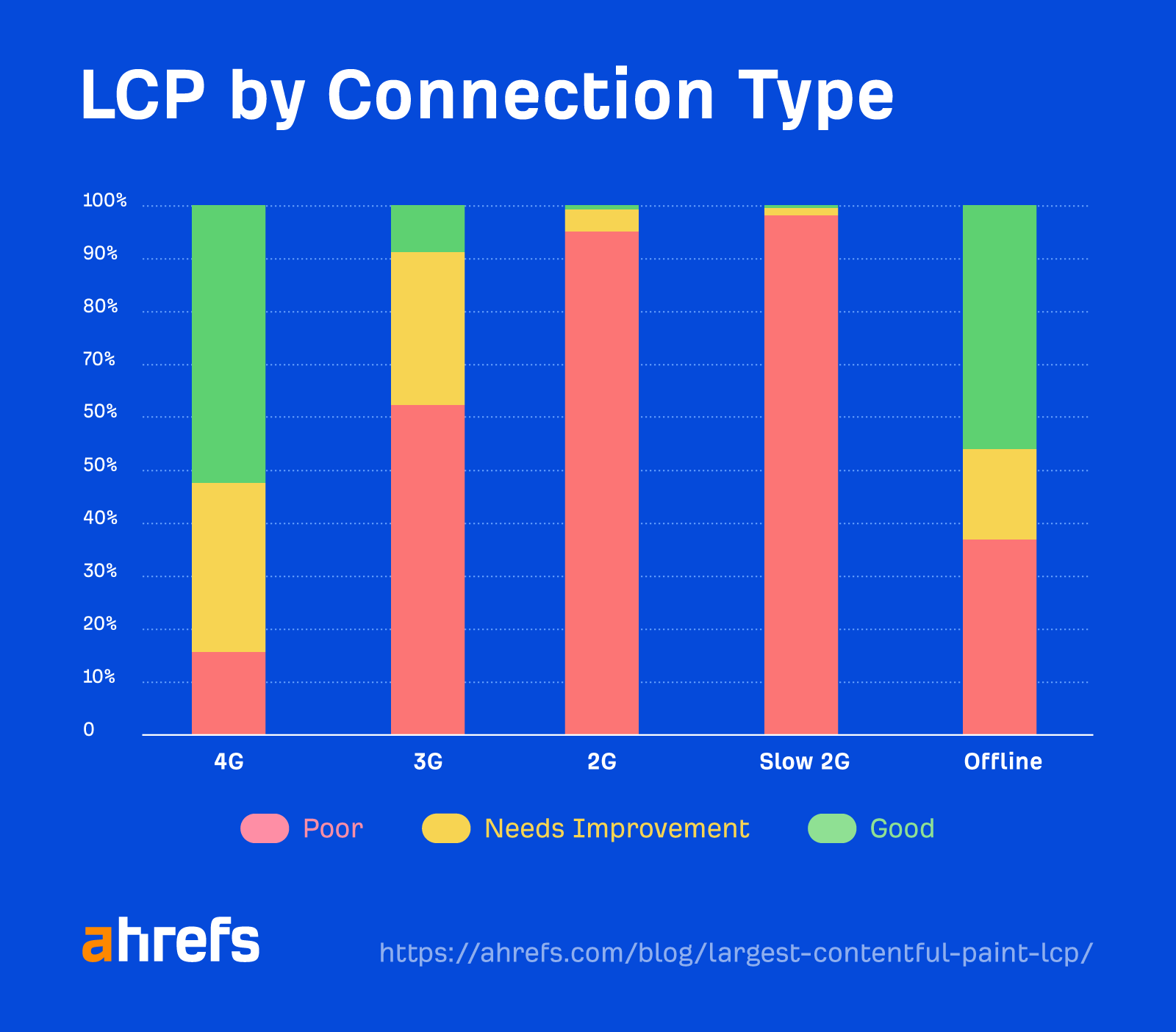

When we ran a study on Core Web Vitals, we saw that pages are much less likely to have good LCP values on mobile devices than on desktop.

The LCP threshold seems almost impossible to pass on slower connections.

There are a couple of different ways of measuring LCP you'll want to look at: field data and lab data.

Field data comes from the Chrome User Experience Report (CrUX), which is data from real Chrome users who choose to share it. This gives you the best idea of real-world LCP performance across different network conditions, devices, caching, etc. It's also what Google actually uses to measure you for Core Web Vitals.

Lab data comes from tests run under the same conditions so that the results are repeatable. Google doesn't use this for Core Web Vitals, but it's useful for testing because CrUX/field data covers a rolling 28-day window, so it takes a while to see the impact of changes.
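To see why changes take a while to show up, here's a toy Python model of a 28-day window. It assumes every visit before a fix takes 3,000 ms and every visit after takes 2,000 ms, that traffic is the same each day, and that the 75th percentile is computed over all visits in the window using the nearest-rank method. Real traffic is messier, but the model shows the kind of lag to expect.

```python
import math

WINDOW_DAYS = 28

def pooled_p75(samples: list[int]) -> int:
    """Nearest-rank 75th percentile of every visit in the window."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]

def days_until_passing(before_ms: int, after_ms: int,
                       visits_per_day: int = 100, threshold_ms: int = 2500):
    """Days after a fix until the 28-day p75 is at or under the threshold (None if never)."""
    for days_since_fix in range(WINDOW_DAYS + 1):
        old_days = WINDOW_DAYS - days_since_fix
        window = ([before_ms] * (old_days * visits_per_day)
                  + [after_ms] * (days_since_fix * visits_per_day))
        if pooled_p75(window) <= threshold_ms:
            return days_since_fix
    return None

print(days_until_passing(3000, 2000))  # 21
```

Under these assumptions, faster visits have to make up 75% of the window (21 of 28 days) before the page passes.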

The best tool for measuring LCP depends on the type of data you want (lab or field) and whether you want it for one URL or many.

Measuring LCP for a single URL

PageSpeed Insights pulls page-level field data that you can't otherwise query in the CrUX dataset. It also runs lab tests for you based on Google Lighthouse and gives you origin data, so you can compare the page's performance to the entire site.

Measuring LCP for many URLs or an entire site

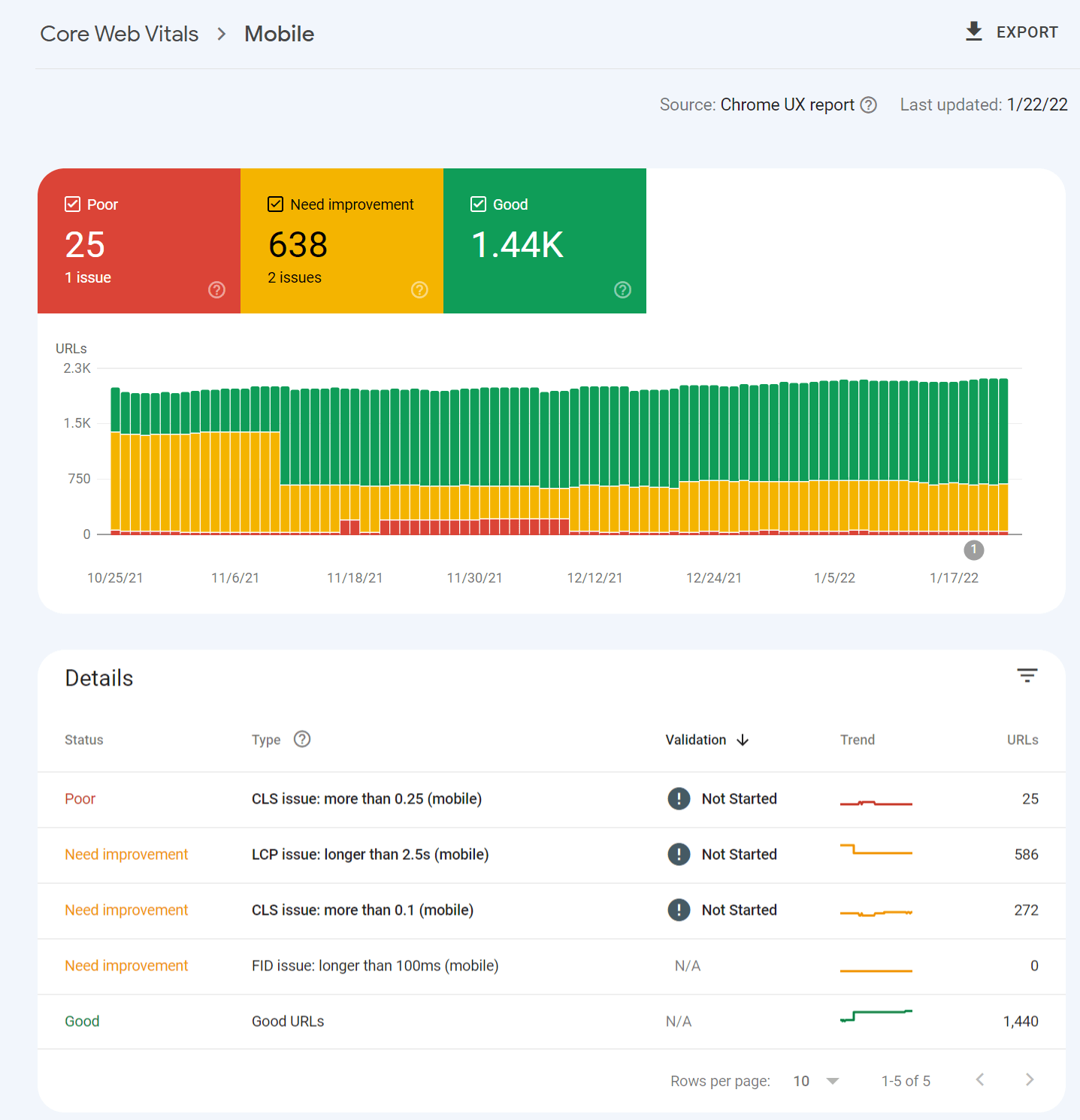

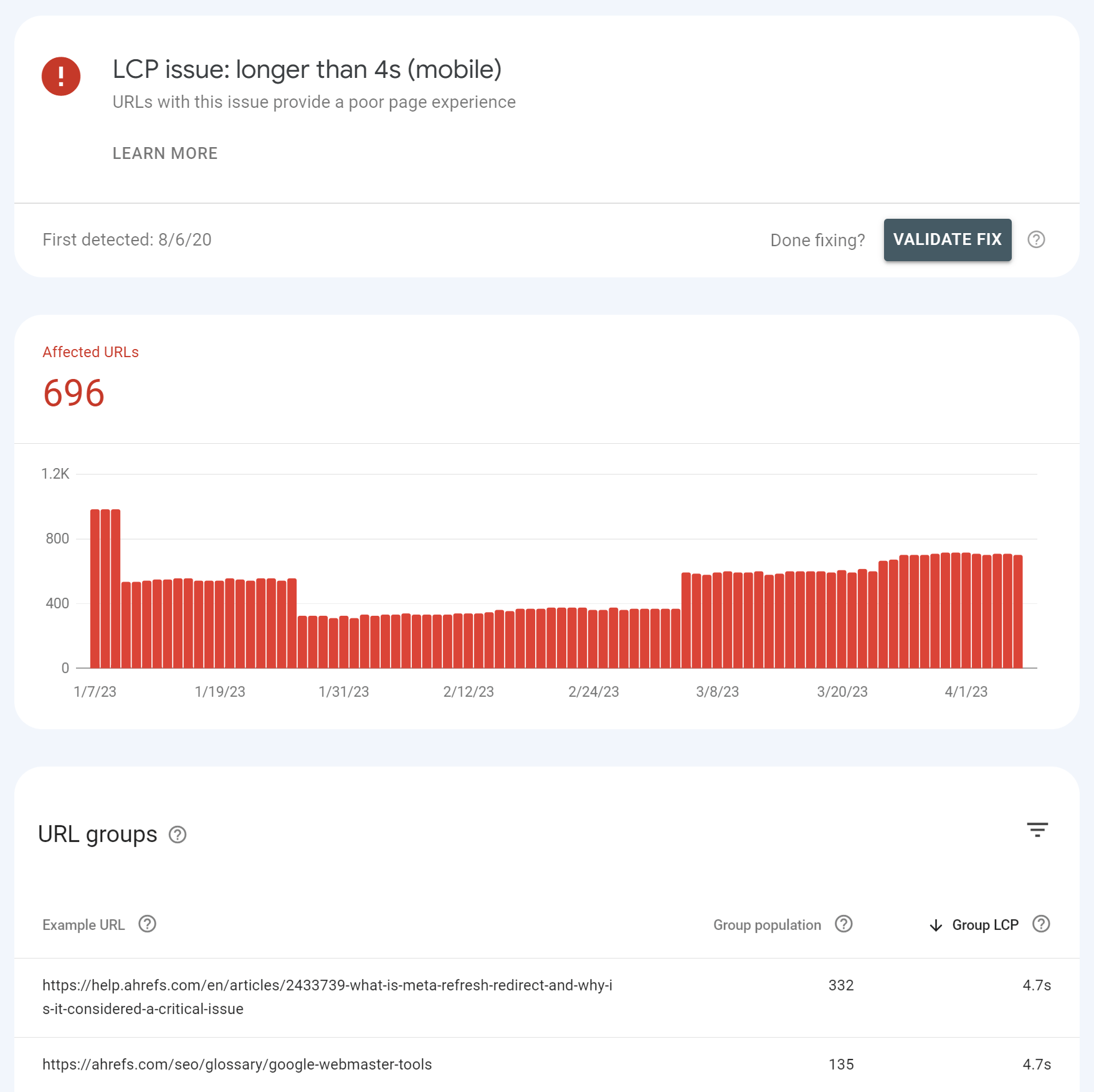

You can get CrUX data in Google Search Console, bucketed into the categories of good, needs improvement, and poor.

Clicking into one of the issues gives you a breakdown of the page groups that are affected. The groups are pages with similar values that likely use the same template. You make the change once in the template, and it will be fixed across all the pages in the group.

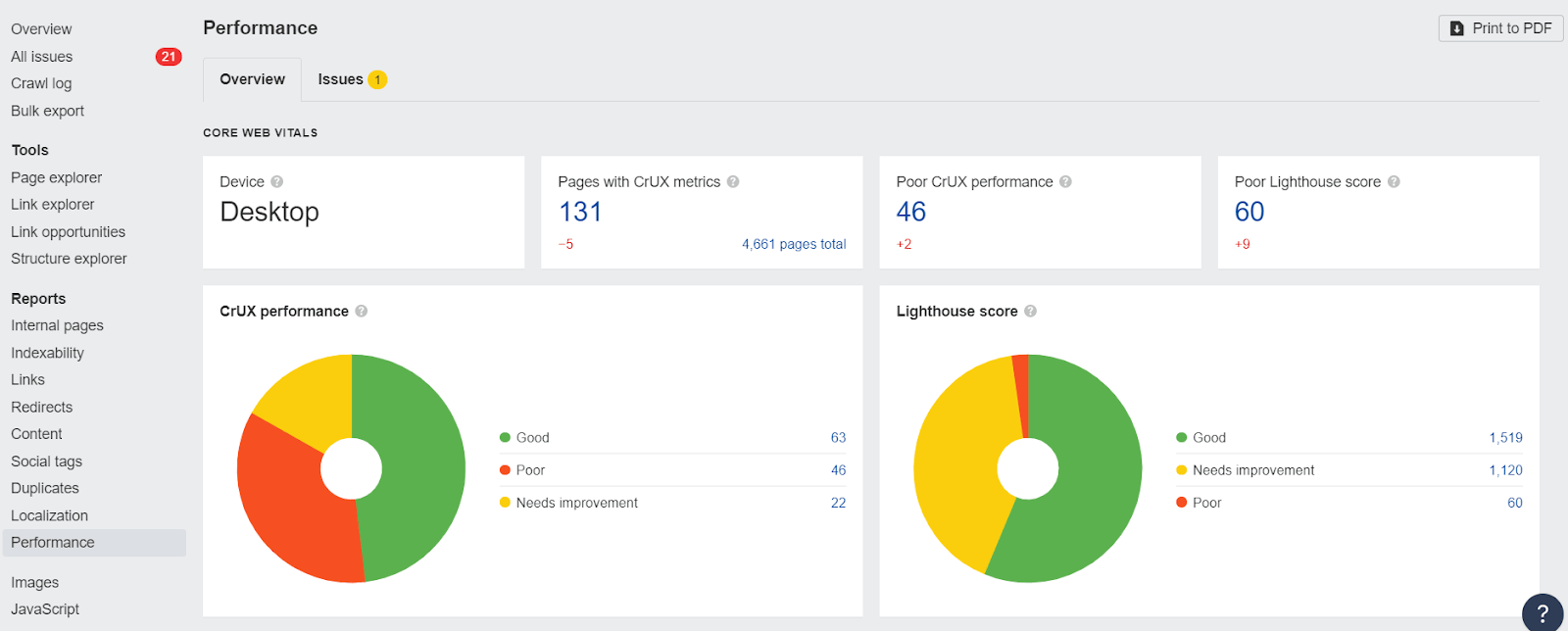

If you want both lab data and field data at scale, the only way to get that is through the PageSpeed Insights API. You can connect to it easily with Ahrefs' Site Audit and get reports detailing your performance. This is free for verified sites with an Ahrefs Webmaster Tools (AWT) account.

Note that the Core Web Vitals data shown will be determined by the user-agent you select for your crawl during setup. If you crawl as mobile, you'll get mobile CWV values from the API.
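If you'd rather script it yourself, here's a minimal Python sketch that calls the PageSpeed Insights v5 API and pulls out field (CrUX) and lab (Lighthouse) LCP. The endpoint and the response fields it reads (`loadingExperience.metrics.LARGEST_CONTENTFUL_PAINT_MS.percentile` and `lighthouseResult.audits["largest-contentful-paint"].numericValue`) reflect the v5 response as I understand it, so check them against the API reference. The `strategy` parameter plays the same role as the mobile/desktop choice above. An API key is optional for occasional requests.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"

def psi_url(page_url: str, strategy: str = "mobile", api_key: Optional[str] = None) -> str:
    """Build a runPagespeed request URL for one page."""
    params = {"url": page_url, "strategy": strategy, "category": "performance"}
    if api_key:
        params["key"] = api_key
    return PSI_ENDPOINT + "?" + urllib.parse.urlencode(params)

def extract_lcp(report: dict) -> dict:
    """Field p75 LCP and lab LCP, both in ms; None where the report lacks the data."""
    field = (report.get("loadingExperience", {}).get("metrics", {})
             .get("LARGEST_CONTENTFUL_PAINT_MS", {}).get("percentile"))
    lab = (report.get("lighthouseResult", {}).get("audits", {})
           .get("largest-contentful-paint", {}).get("numericValue"))
    return {"field_p75_ms": field, "lab_ms": lab}

def fetch_lcp(page_url: str, strategy: str = "mobile", api_key: Optional[str] = None) -> dict:
    """Call the API (network request) and return the LCP values."""
    with urllib.request.urlopen(psi_url(page_url, strategy, api_key), timeout=120) as resp:
        return extract_lcp(json.load(resp))

# Example (makes a real network request):
# print(fetch_lcp("https://example.com/", strategy="desktop"))
```

The field value will be `None` for pages that don't have enough CrUX data. Loop over a list of URLs to cover a whole site, and keep an eye on the API's rate limits.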

In PageSpeed Insights, the LCP element is listed in the “Diagnostics” section. Also notice that there's a tab to select LCP, which shows only the issues related to LCP. These are the issues seen in the lab test that you'll want to solve.

A lot of issues relate to LCP, which makes it the hardest metric to improve.

The general idea sounds simple enough: give me the largest element faster. But in practice, this gets fairly complex. Some files may require others to be loaded first, or there may be conflicting priorities in the browser. You may fix a bunch of issues without actually seeing an improvement, which can be frustrating.

If you're not very technical and don't want to hire someone, look for performance or page speed optimization plugins, modules, or packages for whatever system you're using. You can use the information below as a guide to the features you may need.

Here are a few ways you can improve LCP:

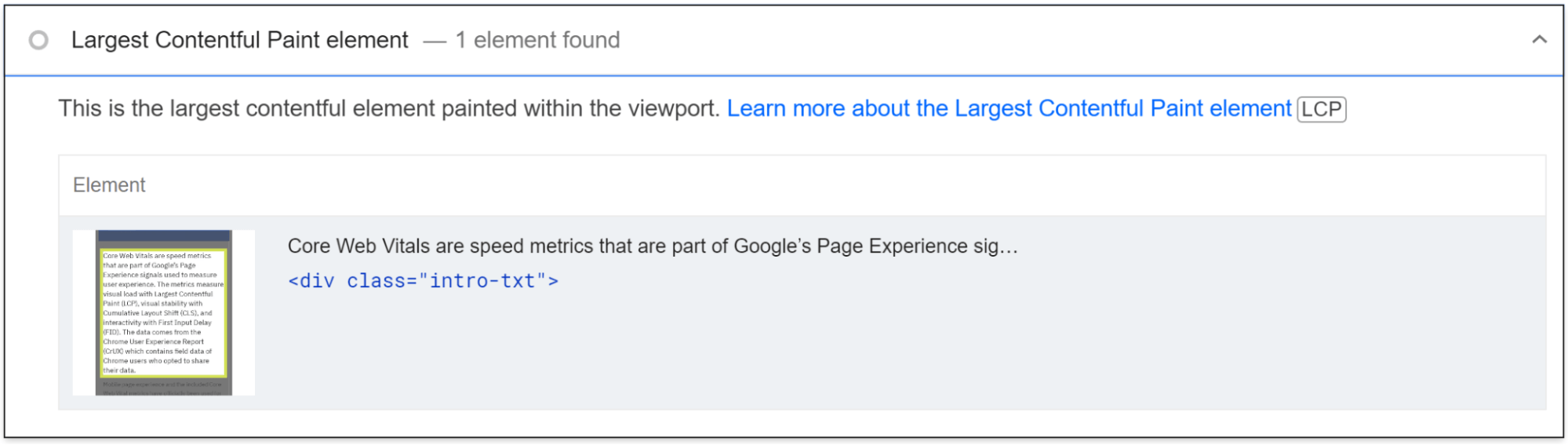

1. Find your LCP element

In PageSpeed Insights, you can click “Largest Contentful Paint element” in the “Diagnostics” section, and it will identify your LCP element.

2. Prioritize loading of resources

To pass the LCP check, you should prioritize how your resources are loaded in the critical rendering path. By that, I mean rearranging the order in which resources are downloaded and processed.

You should first load the resources needed for your LCP element and any other resources needed for the content above the fold. Once the initially visible elements have loaded, the rest can follow.

Many sites can get to a passing LCP time just by adding some early hints or preload statements for things like the main image, as well as necessary stylesheets and fonts. Let's look at how to optimize the various resource types.
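Early hints are sent as a `103 Early Hints` interim response carrying `Link` headers, which arrives before the final response. Here's a rough Python sketch that builds those headers from a list of resources. The file paths are hypothetical, and whether you can send a 103 at all depends on your server or CDN.

```python
def preload_link_header(resources: list[tuple[str, str]]) -> str:
    """Build one Link header value that preloads each (href, as) pair."""
    parts = []
    for href, kind in resources:
        part = f"<{href}>; rel=preload; as={kind}"
        if kind == "font":
            # Fonts are fetched in CORS mode, so the hint must say so too.
            part += "; crossorigin"
        parts.append(part)
    return ", ".join(parts)

def early_hints_response(resources: list[tuple[str, str]]) -> str:
    """Interim 103 response a server could send while it builds the real page."""
    return "HTTP/1.1 103 Early Hints\r\nLink: " + preload_link_header(resources) + "\r\n\r\n"

print(preload_link_header([("/hero.jpg", "image"), ("/css/main.css", "style")]))
```

The same `Link` values can also be sent on the final 200 response, or written as `<link rel="preload">` tags in the HTML.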

Images early

If you don't need the image, the most impactful solution is to simply get rid of it. If you must have the image, I suggest optimizing its size and quality to keep it as small as possible.

You can also use Priority Hints. fetchpriority="high" can be used on <img> or <link> tags and tells browsers to fetch the image early, so it displays a little sooner.

Priority Hints don't work in all browsers, so you may also want to preload the image. Preloading starts the download of that image a little earlier, but not quite as early as fetchpriority="high".

A preload statement for a responsive image looks like this:

<link rel="preload" as="image" href="cat.jpg"
imagesrcset="cat_400px.jpg 400w, cat_800px.jpg 800w, cat_1600px.jpg 1600w"
imagesizes="50vw">

You can even use fetchpriority="high" and preload together!
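If you generate pages from templates, a small helper keeps the preload markup consistent. This Python sketch builds the responsive preload tag shown above and adds `fetchpriority="high"` by default. The function name and signature are only for illustration.

```python
from html import escape

def image_preload_tag(href: str, srcset: list[tuple[str, int]], sizes: str,
                      high_priority: bool = True) -> str:
    """<link rel="preload"> for a responsive image, optionally with fetchpriority="high"."""
    srcset_attr = ", ".join(f"{url} {width}w" for url, width in srcset)
    attrs = [
        'rel="preload"',
        'as="image"',
        f'href="{escape(href)}"',  # fallback for browsers without imagesrcset support
        f'imagesrcset="{escape(srcset_attr)}"',
        f'imagesizes="{escape(sizes)}"',
    ]
    if high_priority:
        attrs.append('fetchpriority="high"')
    return "<link " + " ".join(attrs) + ">"

print(image_preload_tag(
    "cat.jpg",
    [("cat_400px.jpg", 400), ("cat_800px.jpg", 800), ("cat_1600px.jpg", 1600)],
    "50vw",
))
```

Keep `imagesrcset` and `imagesizes` identical to the `srcset` and `sizes` on the actual <img>. Otherwise the browser may preload one file and then download a different one.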

Images late

You should lazy load any images you don't need immediately. This loads them later in the process, or when a user is close to seeing them. You can use loading="lazy" like this:

<img src="cat.jpg" alt="cat" loading="lazy">

Don't lazy load images above the fold!
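To catch that mistake, here's a rough Python audit built on the standard library's `HTMLParser`. It assumes the first two `<img>` tags in the HTML are the ones above the fold. That's only a heuristic, since what's actually visible depends on layout and screen size.

```python
from html.parser import HTMLParser

class LazyImageAudit(HTMLParser):
    """Collects lazy-loaded images among the first few <img> tags in the HTML."""

    def __init__(self, above_fold_count: int = 2):
        super().__init__()
        self.above_fold_count = above_fold_count
        self.seen = 0
        self.problems: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        self.seen += 1
        attr_map = dict(attrs)
        lazy = (attr_map.get("loading") or "").lower() == "lazy"
        if self.seen <= self.above_fold_count and lazy:
            self.problems.append(attr_map.get("src") or "(no src)")

def audit(html: str, above_fold_count: int = 2) -> list[str]:
    parser = LazyImageAudit(above_fold_count)
    parser.feed(html)
    parser.close()
    return parser.problems

html = '<img src="hero.jpg" loading="lazy"><img src="logo.png"><img src="footer.jpg" loading="lazy">'
print(audit(html))  # ['hero.jpg']
```

The footer image is fine to lazy load. The hero image is flagged because it's likely your LCP element.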

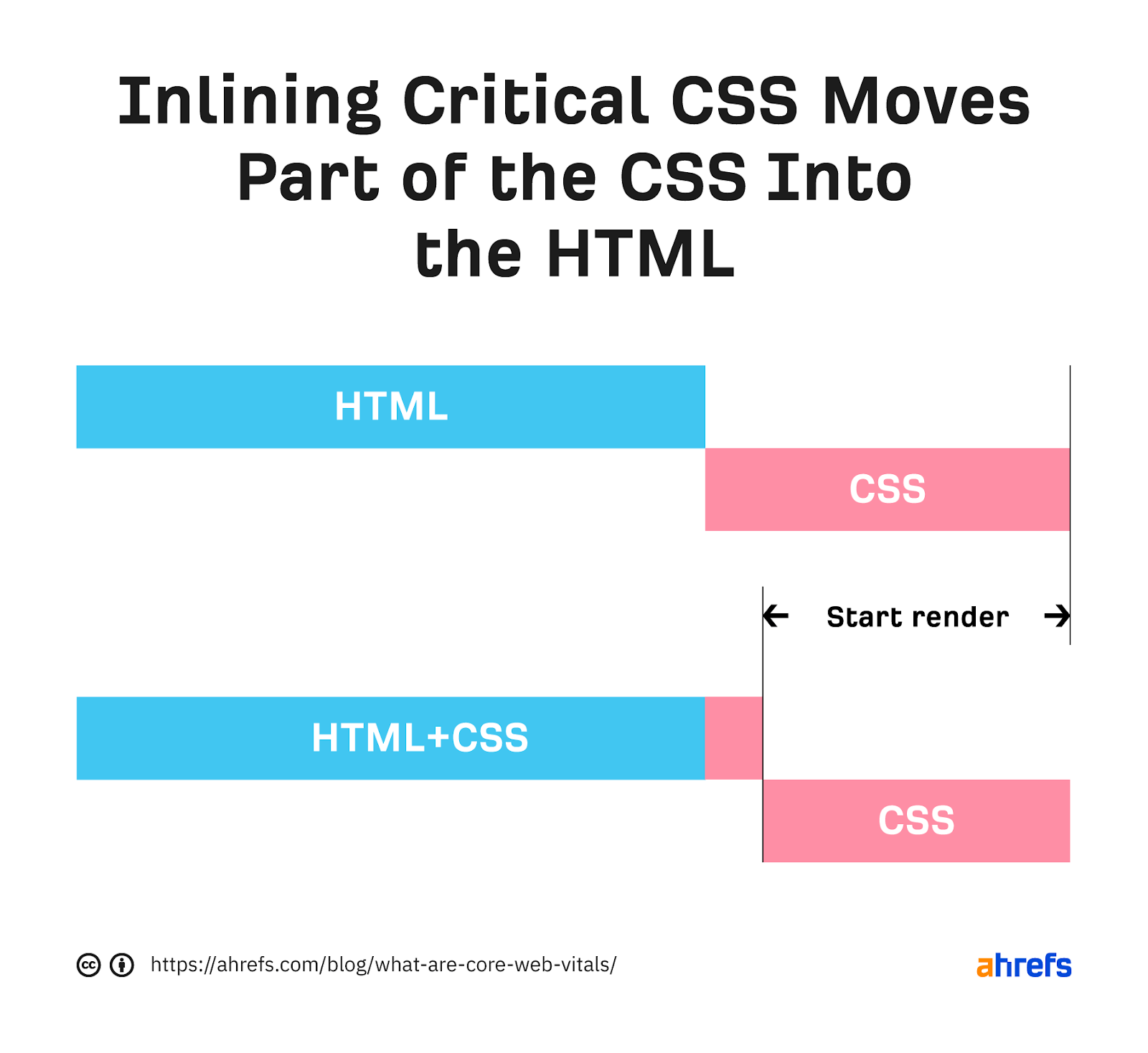

CSS early

Removing unused CSS and minifying the CSS you have are good first steps.

The other major thing you should do is inline critical CSS. This takes the part of the CSS needed to render the content users see immediately and places it directly in the HTML. By the time the HTML has downloaded, all the CSS needed to render what users see is already available.
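Working out which CSS is critical is the hard part, and it's usually done with a tool. Once you have it, inlining is simple. This Python sketch assumes the critical CSS has already been extracted and inserts it as a `<style>` block right after the opening `<head>` tag, so it's available as soon as the HTML arrives.

```python
import re

def inline_critical_css(html: str, critical_css: str) -> str:
    """Insert critical CSS as a <style> block right after the opening <head> tag."""
    if "</style" in critical_css.lower():
        raise ValueError("critical CSS must not contain a closing </style> tag")
    style_block = "<style>" + critical_css + "</style>"
    # \b stops this from matching <header>; a function replacement avoids backslash escapes.
    new_html, count = re.subn(r"<head\b[^>]*>", lambda m: m.group(0) + style_block,
                              html, count=1, flags=re.IGNORECASE)
    if count == 0:
        raise ValueError("no <head> tag found")
    return new_html

page = "<html><head><title>Cats</title></head><body><h1>Cats</h1></body></html>"
print(inline_critical_css(page, "h1{font-size:2rem;margin:0}"))
```

The rest of the stylesheet can then be loaded without blocking rendering.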

CSS late

Any extra CSS that isn't critical should be applied later in the process. You can start downloading it with a preload statement but hold off applying it until later with an onload event. This looks like:

<link rel="preload" href="https://ahrefs.com/weblog/largest-contentful-paint-lcp/stylesheet.css" as="style" onload="this.rel='stylesheet'">

Fonts

I'm going to give you a few options here:

Good: Preload your fonts. It's even better if you host them on the same server to avoid an extra connection.

Better: font-display: optional. This can be paired with a preload statement. It gives your font a short window of time to load. If the font doesn't arrive in time, the initial page load simply shows a fallback font. Your custom font is then cached and shows up on subsequent page loads.

Best: Just use a system font. There's nothing to load, so there are no delays.
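Here's a small Python sketch that generates the markup for the “Good” and “Better” options, plus a sample system font stack for the “Best” option. The family name `Brand`, the path `/fonts/brand.woff2`, and the exact font stack are hypothetical. Note the `crossorigin` attribute. Font preloads are fetched in CORS mode, so without it the preloaded file isn't reused and the font gets downloaded twice.

```python
def font_preload_tag(href: str) -> str:
    """Preload for a self-hosted WOFF2 font; crossorigin is required even on the same origin."""
    return f'<link rel="preload" href="{href}" as="font" type="font/woff2" crossorigin>'

def font_face_css(family: str, href: str) -> str:
    """@font-face rule that only uses the custom font if it arrives almost immediately."""
    return (
        "@font-face {\n"
        f'  font-family: "{family}";\n'
        f'  src: url("{href}") format("woff2");\n'
        "  font-display: optional;\n"
        "}"
    )

# "Best" option: no download at all, just fonts already on the device.
SYSTEM_FONT_STACK = 'system-ui, -apple-system, "Segoe UI", Roboto, sans-serif'

print(font_preload_tag("/fonts/brand.woff2"))
print(font_face_css("Brand", "/fonts/brand.woff2"))
print(f"body {{ font-family: {SYSTEM_FONT_STACK}; }}")
```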

JavaScript early

Removing unused JavaScript and minifying what you have are good first steps. If you're using a JavaScript framework, you may want to prerender or server-side render (SSR) the page.

You can also inline the JavaScript needed early. This works the same way as described in the CSS section: you move parts of your JavaScript files into the HTML so they load with it.

Another option is to preload the JavaScript files so that you get them earlier. This should only be done for assets needed to load the content above the fold, or when some functionality depends on this JavaScript.

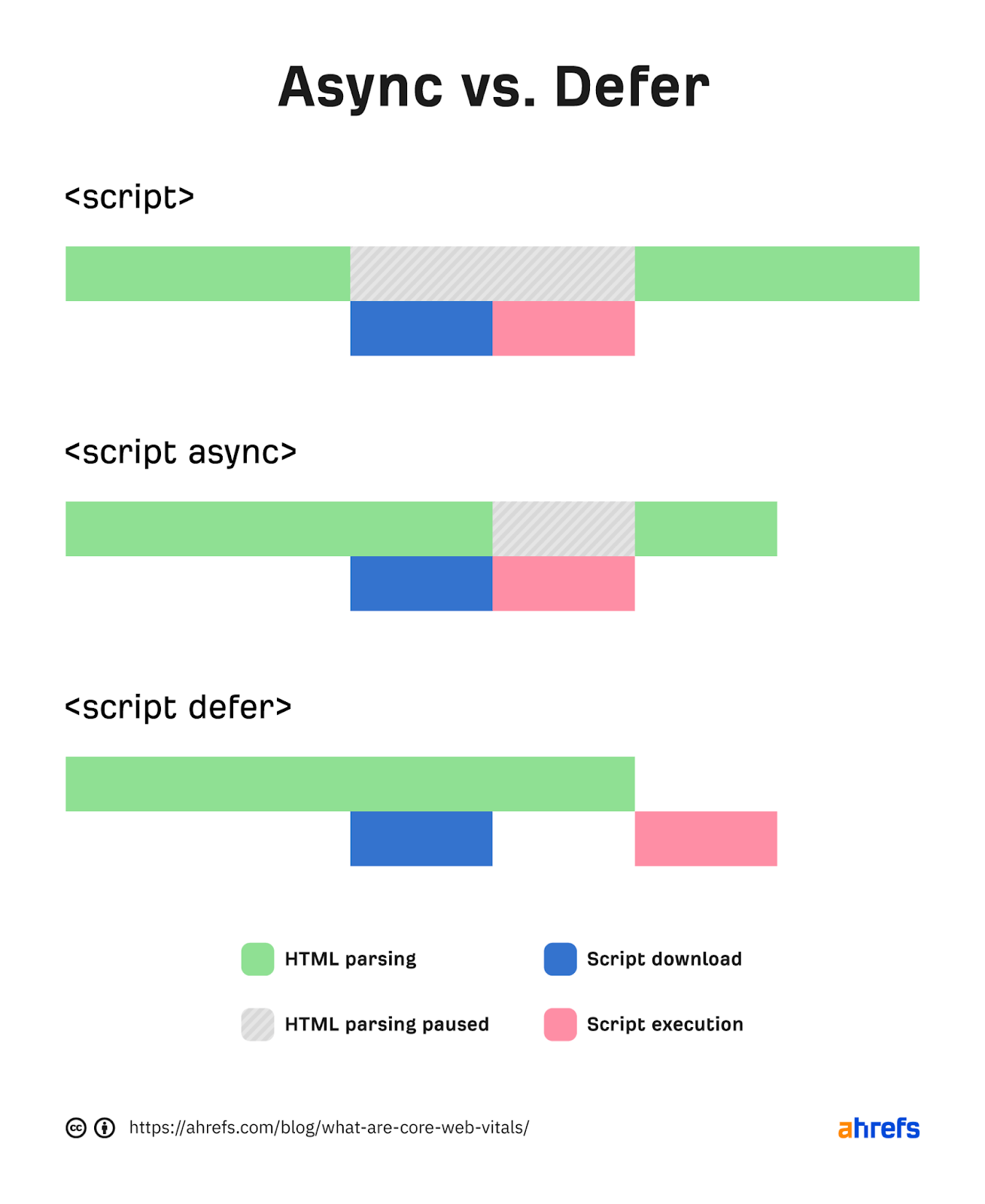

JavaScript late

Any JavaScript you don't need immediately should be loaded later. There are two main ways to do that: the defer and async attributes, which can be added to your script tags.

Normally, a script blocks the parser while it downloads and executes. Async lets parsing and downloading happen at the same time but still blocks parsing while the script executes. Defer doesn't block parsing during the download, and the script only executes after the HTML has finished parsing.
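Here's a toy Python model of those three behaviors for a single script. It ignores the browser's preload scanner, bandwidth contention, and multiple scripts, and the timings in the example are made up. It's only meant to show where each attribute spends the parser's time.

```python
from dataclasses import dataclass

@dataclass
class Timing:
    parse_done_ms: float   # when the HTML parser finishes
    script_done_ms: float  # when the script finishes executing

def script_timing(mode: str, parse_ms: float, tag_at_ms: float,
                  download_ms: float, exec_ms: float) -> Timing:
    """parse_ms: unblocked parse time; tag_at_ms: when the parser reaches the <script> tag."""
    ready = tag_at_ms + download_ms  # when the file has finished downloading
    if mode == "normal":
        # Parser waits at the tag for the download and the execution.
        return Timing(parse_ms + download_ms + exec_ms, ready + exec_ms)
    if mode == "async":
        if ready < parse_ms:
            # Runs as soon as it arrives, pausing the parser while it executes.
            return Timing(parse_ms + exec_ms, ready + exec_ms)
        # Arrives after parsing has finished, so it never blocks the parser.
        return Timing(parse_ms, ready + exec_ms)
    if mode == "defer":
        # Runs only once parsing is done and the download is complete.
        return Timing(parse_ms, max(parse_ms, ready) + exec_ms)
    raise ValueError(f"unknown mode: {mode}")

for mode in ("normal", "async", "defer"):
    print(mode, script_timing(mode, parse_ms=300, tag_at_ms=50, download_ms=200, exec_ms=100))
```

With these numbers, parsing finishes at 600 ms for a normal script, 400 ms with async, and 300 ms with defer. The async script itself finishes first, at 350 ms.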

Which should you use? For anything you want to run earlier, I'd lean toward async.

For instance, I tend to use async on analytics tags so that more users are recorded. You'll want to defer anything that isn't needed until later. Keep in mind that async scripts run as soon as they finish downloading, in no guaranteed order, while deferred scripts run in the order they appear in the HTML. That makes defer the safer choice for scripts that depend on each other. The attributes are pretty easy to add.

Try these examples:

Regular:

<script src="https://www.area.com/file.js"></script>

Async:

<script src="https://www.area.com/file.js" async></script>

Defer:

<script src="https://www.area.com/file.js" defer></script>

3. Make files smaller

If you can get rid of any files or reduce their sizes, your page will load faster. This means you may want to delete any files that aren't being used, or parts of the code that aren't used.

How you go about this will depend a lot on your setup, but the process of removing unneeded parts of files is usually called tree shaking.

This is commonly done through an automated process with Webpack or Grunt for JavaScript frameworks, or sometimes even in systems like WordPress, but most common content management systems may not support it.

You may want to skip this, or see whether any plugins offer this option for your system.

To make the files smaller, you usually want to compress them.

Pretty much every file type used to build your website can be compressed, including CSS, JavaScript, images, and HTML. Nearly every system and server also supports compression.

It's usually done at the server or CDN level, but some plugins, like WP Rocket for WordPress, support it.
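Servers and CDNs usually handle this with gzip or Brotli. To get a feel for why it's worth it, this Python sketch compresses a made-up, highly repetitive CSS file with the standard library's `gzip` module. Real files compress less dramatically than this one, but text assets like CSS, JavaScript, and HTML usually shrink a lot.

```python
import gzip

# Hypothetical, very repetitive stylesheet (real CSS varies more).
css = (".card { margin: 0 auto; padding: 16px; border-radius: 8px; }\n" * 200).encode("utf-8")

compressed = gzip.compress(css, compresslevel=9)
print(len(css), "bytes ->", len(compressed), "bytes")

# The browser reverses this automatically when the response has Content-Encoding: gzip.
assert gzip.decompress(compressed) == css
```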

4. Serve files closer to users

Information takes time to travel. The farther you are from a server, the longer it takes for the data to be transferred. Unless you serve a small geographical area, using a Content Delivery Network (CDN) is a good idea.

CDNs give you a way to serve your site from locations closer to users. It's like having copies of your server in different places around the world.
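A quick back-of-the-envelope calculation shows why distance matters. Light in optical fiber covers roughly 200,000 km per second, so this Python sketch gives a lower bound on round-trip time. Real routes are longer and add routing delays, and a new HTTPS connection needs several round trips before any data arrives.

```python
FIBER_KM_PER_MS = 200.0  # ~200,000 km/s, roughly two-thirds of the speed of light in a vacuum

def min_round_trip_ms(distance_km: float) -> float:
    """Physical lower bound on a request/response round trip over fiber."""
    return 2 * distance_km / FIBER_KM_PER_MS

print(min_round_trip_ms(10_000))  # 100.0, e.g. a server on another continent
print(min_round_trip_ms(500))     # 5.0, e.g. a nearby CDN edge
```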

5. Host resources on the same server

When you first connect to a server, the browser has to look up the server's address (DNS), open a connection, and negotiate a secure (TLS) connection between you and the server.

This takes some time, and each new connection adds extra delay while it goes through the same process. If you host your resources on the same server, you can eliminate those extra delays.
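This Python sketch estimates the setup cost for one new connection from its round-trip time (RTT). It assumes a DNS lookup takes about one round trip (a cached lookup takes almost none), TCP takes one, and TLS 1.3 takes one (TLS 1.2 takes two). The 80 ms RTT in the example is hypothetical.

```python
def connection_setup_ms(rtt_ms: float, dns_rtts: int = 1, tcp_rtts: int = 1,
                        tls_rtts: int = 1) -> float:
    """Rough time spent before the first request can be sent on a new connection."""
    return rtt_ms * (dns_rtts + tcp_rtts + tls_rtts)

print(connection_setup_ms(80))              # TLS 1.3: 240 ms
print(connection_setup_ms(80, tls_rtts=2))  # TLS 1.2: 320 ms
```

If a resource your LCP element needs lives on another server, that setup time sits directly in its path.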

If you can't use the same server, you may want to use preconnect or dns-prefetch to start connections earlier. Normally, a browser doesn't start a connection to another server until it discovers a resource that needs it. With preconnect or dns-prefetch, that work starts sooner. dns-prefetch only resolves the domain name, while preconnect sets up the full connection. Do note that dns-prefetch has better browser support than preconnect.

For each server you want to connect to early, you add a new statement like:

<link rel="preconnect" href="https://fonts.googleapis.com/">

<link rel="dns-prefetch" href="https://fonts.googleapis.com/">
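If you have a list of the resources a page loads, you can generate these hints for each server automatically. This Python sketch emits one preconnect and one dns-prefetch tag per third-party origin, and the example URLs are hypothetical. It doesn't add the `crossorigin` attribute. A preconnect needs that attribute when the resources from that server are fetched in CORS mode (fonts from fonts.gstatic.com, for example).

```python
from urllib.parse import urlsplit

def resource_hints(resource_urls: list[str], page_origin: str) -> list[str]:
    """One preconnect + dns-prefetch pair per third-party origin, in first-seen order."""
    tags: list[str] = []
    seen: set[str] = set()
    for url in resource_urls:
        parts = urlsplit(url)
        if not parts.netloc:
            continue  # relative URL: same server as the page
        origin = f"{parts.scheme}://{parts.netloc}"
        if origin == page_origin or origin in seen:
            continue
        seen.add(origin)
        tags.append(f'<link rel="preconnect" href="{origin}">')
        tags.append(f'<link rel="dns-prefetch" href="{origin}">')
    return tags

urls = [
    "https://fonts.googleapis.com/css2?family=Inter",
    "https://fonts.googleapis.com/css2?family=Lora",
    "/js/app.js",
    "https://www.example.com/img/hero.jpg",
    "https://cdn.example.net/lib.js",
]
print("\n".join(resource_hints(urls, "https://www.example.com")))
```

Only hint servers you're sure to use early. Each preconnect holds an open connection, so hinting everything can waste work.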

6. Use caching

When you cache resources, they're downloaded for the first page view but don't need to be downloaded again for subsequent page views. With the resources already available, additional page loads will be much faster.

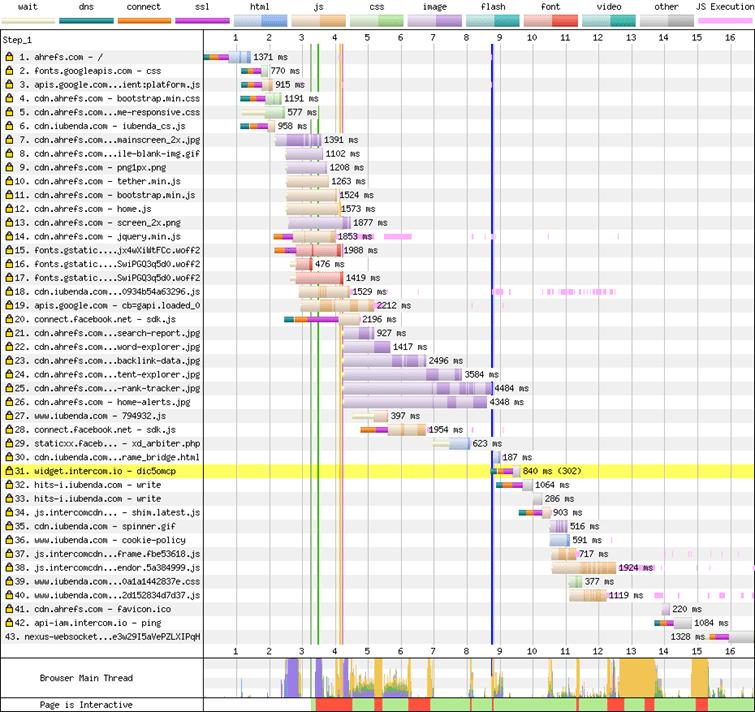

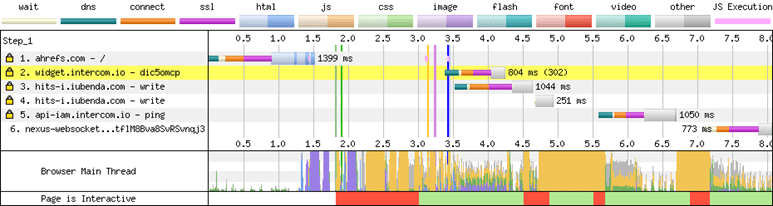

Try how few information are downloaded within the second web page load within the waterfall charts under.

First load of the web page:

Second load of the web page:

If you can, cache on a CDN as well. Your cache time should be as long as you're comfortable with.

An ideal setup is to cache for a really long time but purge the cache whenever you make a change to a page.
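One common way to get very long cache times without serving stale files is to put a hash of each file's contents in its name, so a changed file gets a new URL. That's a swapped-in technique rather than the purge approach above, and the two are often combined. This Python sketch shows the idea. The paths and the exact Cache-Control values are assumptions you'd adjust for your setup.

```python
import hashlib
from pathlib import PurePosixPath

ONE_YEAR_SECONDS = 31536000

def hashed_name(path: str, content: bytes, length: int = 8) -> str:
    """Put a short content hash in the filename, e.g. app.css -> app.<hash>.css."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))

def cache_control(fingerprinted: bool) -> str:
    if fingerprinted:
        # The URL changes whenever the content does, so the file can be cached for a year.
        return f"public, max-age={ONE_YEAR_SECONDS}, immutable"
    # HTML and other un-hashed URLs: browsers may store them but must revalidate before reuse.
    return "no-cache"

print(hashed_name("assets/app.css", b"body { margin: 0 }"))
print(cache_control(True))
```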

7. Misc

There are a few other technologies you may want to look into to help with performance. These include Speculative Prerendering, Signed Exchanges, and HTTP/3.

Final thoughts

Is there a better metric for measuring visible load? I don't see anything new on the horizon at the moment. We've already seen several metrics come and go in the attempt to measure load.

Load and DOMContentLoaded don't really tell you what a user sees. First Contentful Paint (FCP) only marks the beginning of the loading experience. First Meaningful Paint (FMP) and Speed Index (SI) are complex and don't really identify when the main content has loaded.

If you have any questions, message me on Twitter.
